WASHINGTON — Instagram is testing new ways to verify the age of people using its service, including scanning their face with an artificial intelligence tool, having mutual friends vouch for their age, or uploading an ID.

But the tools won't be used, at least not yet, to try to keep children off of the popular photo and video-sharing app. The current test only involves verifying that a user is 18 or older.

The use of face-scanning AI, especially on teenagers, raised some alarm bells Thursday, given the checkered history of Instagram parent Meta when it comes to protecting users' privacy. Meta stressed that the technology used to verify people's age cannot recognize one's identity, only age. Meta said that once age verification is complete, both it and Yoti, the AI contractor it partnered with to conduct the scans, will delete the video selfie.

Meta, which owns Facebook as well as Instagram, said that beginning on Thursday, if someone tries to edit their date of birth on Instagram to change their age from under 18 to 18 or older, they will be required to verify their age using one of these methods.

Meta continues to face questions about the negative effects of its products, especially Instagram, on some teens.

Kids technically have to be at least 13 to use Instagram, as on other social media platforms. But some circumvent this either by lying about their age or by having a parent do so. Teens aged 13 to 17, meanwhile, have additional restrictions on their accounts — for instance, adults they are not connected to can't send them messages — until they turn 18.

The use of uploaded IDs is not new, but the other two options are. “We are giving people a variety of options to verify their age and seeing what works best,” said Erica Finkle, Meta's director of data governance and public policy.

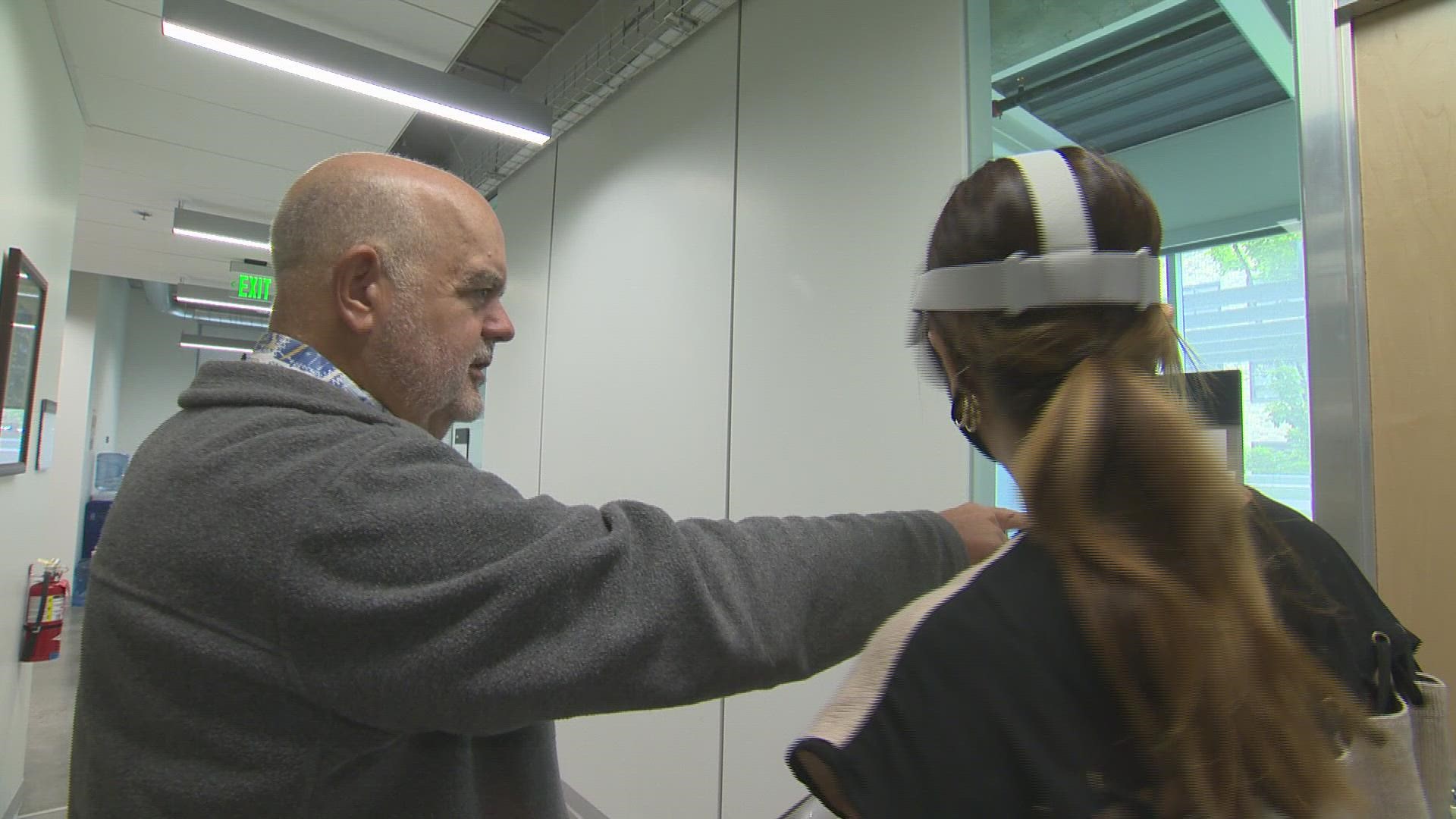

To use the face-scanning option, a user has to upload a video selfie. That video is then sent to Yoti, a London-based startup that uses people's facial features to estimate their age. Finkle said Meta isn't yet trying to pinpoint under-13s using the technology because it doesn't keep data on that age group — which would be needed to properly train the AI system. But if Yoti does predict a user is too young for Instagram, they'll be asked to prove their age or have their account removed, she said.

“It doesn’t ever recognize, uniquely, anyone,” said Julie Dawson, Yoti’s chief policy and regulatory officer. “And the image is instantly deleted once we’ve done it.”

Yoti is one of several biometric companies capitalizing on a push in the United Kingdom and Europe for stronger age verification technology to stop kids from accessing pornography, dating apps and other internet content meant for adults, not to mention bottles of alcohol and other off-limits items at physical stores.

Yoti has been working with several big U.K. supermarkets on face-scanning cameras at self-checkout counters. It has also started verifying the age of users of the youth-oriented French video chatroom app Yubo.

While Instagram is likely to follow through with its promise to delete an applicant’s facial imagery and not try to use it to recognize individual faces, the normalization of face-scanning presents other societal concerns, said Daragh Murray, a senior lecturer at the University of Essex’s law school.

“It’s problematic because there are a lot of known biases with trying to identify by things like age or gender,” Murray said. “You’re essentially looking at a stereotype and people just differ so much.”

A 2019 study by a U.S. agency, the National Institute of Standards and Technology, found that facial recognition technology often performs unevenly based on a person’s race, gender or age, with higher error rates for the youngest and oldest people. There's not yet such a benchmark for age-estimating facial analysis, but Yoti’s own published analysis of its results reveals a similar trend, with slightly higher error rates for women and people with darker skin tones.

Meta’s face-scanning move is a departure from what some of its tech competitors are doing. Microsoft on Tuesday said it would stop providing its customers with facial analysis tools that “purport to infer” emotional states and identity attributes such as age or gender, citing concerns about “stereotyping, discrimination, or unfair denial of services.”

Meta itself announced last year that it was shutting down Facebook's face-recognition system and deleting the faceprints of more than 1 billion people after years of scrutiny from courts and regulators. But it signaled at the time that it wouldn't give up entirely on analyzing faces, moving away from the broad-based tagging of social media photos that helped popularize commercial use of facial recognition toward “narrower forms of personal authentication.”